As video traffic on the Internet grows each year and viewers' expectations for experience and quality keep rising, the need for streaming services and video platforms to deliver higher resolutions at ever-higher quality has never been greater. This drives the need for more efficient video coding standards and better methods for assessing the resulting video quality. Although many video quality evaluation methods exist, the most widely accepted are PSNR, SSIM, and VMAF.

This article discusses how each of these quality metrics works, with a focus on PSNR avg.MSE and PSNR avg.log compared to VMAF. It draws on real-world experience from our internal experiments and those of more than 50 customers. Here is an overview of the three most common objective quality metrics.

PSNR (Peak Signal-to-Noise Ratio)

PSNR is the ratio, expressed in decibels, between the maximum possible power of a signal and the power of the noise that corrupts it. For video, the signal is the original frame and the noise is the difference between the original and the compressed frame, measured as the mean squared error (MSE) of the pixel values. Because it compares pixel values directly, PSNR is commonly used to measure the quality of lossy compression codecs.
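As a minimal sketch of this definition, the function below computes the PSNR of a single frame. It assumes both frames are a single plane (for example, luma only) passed as flat sequences of integer pixel values; `frame_psnr` is a hypothetical helper, not a function from any particular library.

```python
import math
from typing import Sequence


def frame_psnr(reference: Sequence[int], distorted: Sequence[int], bit_depth: int = 8) -> float:
    """Return the PSNR in dB between two equally sized single-plane frames."""
    if len(reference) != len(distorted) or not reference:
        raise ValueError("frames must be non-empty and have the same number of pixels")
    # Noise power: mean squared error between original and compressed pixels.
    mse = sum((r - d) ** 2 for r, d in zip(reference, distorted)) / len(reference)
    if mse == 0:
        return math.inf  # identical frames have no noise, so PSNR is unbounded
    peak = 2 ** bit_depth - 1  # maximum pixel value: 255 for 8-bit video
    return 10 * math.log10(peak ** 2 / mse)


if __name__ == "__main__":
    original = [100, 100, 100, 100]
    compressed = [101, 99, 100, 100]
    print(f"{frame_psnr(original, compressed):.2f} dB")
```

Here the MSE is 0.5, so the script prints 51.14 dB, inside the typical 30 to 50 dB range's upper end for 8-bit content.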

PSNR is an attractive option for the following reasons:

- It is simple to compute, which makes PSNR practical for real-world applications.

- PSNR has a long history of usage, making it easy to compare the performance of new algorithms to those previously evaluated.

- PSNR is easy to apply to encoding optimization.

When comparing video codecs or encoder implementations, PSNR serves as an approximation of human perception of reconstruction quality. Typical PSNR values in lossy image and video compression are between 30 and 50 dB for 8-bit content, where higher means better quality. For a video sequence, there are two primary ways to calculate PSNR: PSNR avg.MSE and PSNR avg.log.

PSNR avg.MSE is calculated by first computing the arithmetic mean of the per-frame MSE values over all frames and then converting that mean MSE to PSNR by taking the logarithm.

PSNR avg.log is computed by first calculating the PSNR of each frame and then taking the arithmetic mean of those per-frame PSNR values. Repeated testing shows that PSNR avg.log is less accurate because it weights the final score toward frames with higher quality.
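Written out for a sequence of N frames with per-frame mean squared error MSE_i and peak pixel value MAX (255 for 8-bit video), the two averaging methods are:

```latex
\mathrm{PSNR}_{\text{avg.MSE}} = 10 \log_{10} \frac{\mathrm{MAX}^2}{\frac{1}{N}\sum_{i=1}^{N} \mathrm{MSE}_i}
\qquad
\mathrm{PSNR}_{\text{avg.log}} = \frac{1}{N} \sum_{i=1}^{N} 10 \log_{10} \frac{\mathrm{MAX}^2}{\mathrm{MSE}_i}
```

Because the logarithm is concave, Jensen's inequality gives PSNR avg.log ≥ PSNR avg.MSE for any sequence, with equality only when every frame has the same MSE. This is the formal version of the bias described above: a few very high-PSNR frames can raise avg.log substantially, while avg.MSE is dominated by the frames with the largest error.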

Example: consider a video sequence with two frames, the first with a PSNR of 99 dB and the second with a PSNR of 50 dB. The two frames will be subjectively indistinguishable. However, PSNR avg.log averages the 99 dB value directly into the result, inflating the final score and giving the false impression that the sequence is of higher subjective quality.
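The two-frame example can be checked numerically. This sketch assumes 8-bit video, converts each frame's PSNR back to an MSE, and then applies both averaging methods; the helper names are illustrative only, not taken from any specific tool.

```python
import math
from typing import Sequence

PEAK = 255  # maximum pixel value for 8-bit video (assumption of this sketch)


def mse_from_psnr(psnr_db: float) -> float:
    """Invert PSNR = 10*log10(PEAK^2 / MSE) to recover a frame's MSE."""
    return PEAK ** 2 / 10 ** (psnr_db / 10)


def psnr_from_mse(mse: float) -> float:
    """Convert an MSE to PSNR in dB; zero error means unbounded PSNR."""
    return math.inf if mse == 0 else 10 * math.log10(PEAK ** 2 / mse)


def psnr_avg_mse(frame_mses: Sequence[float]) -> float:
    """Average the per-frame MSE first, then convert the mean to PSNR once."""
    return psnr_from_mse(sum(frame_mses) / len(frame_mses))


def psnr_avg_log(frame_mses: Sequence[float]) -> float:
    """Convert each frame's MSE to PSNR, then average the dB values."""
    return sum(psnr_from_mse(m) for m in frame_mses) / len(frame_mses)


if __name__ == "__main__":
    mses = [mse_from_psnr(99.0), mse_from_psnr(50.0)]
    print(f"PSNR avg.log: {psnr_avg_log(mses):.2f} dB")  # 74.50 dB
    print(f"PSNR avg.MSE: {psnr_avg_mse(mses):.2f} dB")  # 53.01 dB
```

The avg.log result lands midway between the two frames, while avg.MSE stays close to the worse frame, because the 99 dB frame contributes almost nothing to the mean MSE.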

The HVS (human visual system) is highly sensitive to frames whose quality is noticeably worse than the rest. When watching a video that contains a poor-quality frame, viewers tend to remember that frame even though it represents a small percentage of the total encoded frames. PSNR avg.MSE is weighted toward low-quality frames, which makes it better aligned with how humans “see” video in motion.

SSIM (Structural Similarity Index Measure)

SSIM is a full-reference image quality index that measures the similarity of two images in terms of three components: luminance (brightness), contrast, and structure. SSIM values lie in [-1, 1] and in practice usually fall between 0 and 1; the closer the value is to 1, the smaller the distortion, and identical images score exactly 1. SSIM is frequently compared with other metrics, including PSNR, MSE, and other perceptual image and video quality metrics, and in testing it often outperforms MSE-based metrics.
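To make the three components concrete, here is a simplified SSIM computed once over the whole image (a single global window). This simplification is an assumption made for brevity: standard SSIM evaluates the same expression over local windows (typically an 11x11 Gaussian window) and averages the resulting map, so these numbers will not match library implementations. `global_ssim` is a hypothetical helper.

```python
from typing import Sequence


def global_ssim(x: Sequence[float], y: Sequence[float], bit_depth: int = 8,
                k1: float = 0.01, k2: float = 0.03) -> float:
    """SSIM over a single window covering the whole single-plane image."""
    n = len(x)
    if n == 0 or n != len(y):
        raise ValueError("images must be non-empty and the same size")
    dynamic_range = 2 ** bit_depth - 1
    c1 = (k1 * dynamic_range) ** 2  # stabilizes the luminance term
    c2 = (k2 * dynamic_range) ** 2  # stabilizes the contrast/structure term
    mu_x = sum(x) / n  # mean brightness (luminance)
    mu_y = sum(y) / n
    var_x = sum((a - mu_x) * (a - mu_x) for a in x) / n  # contrast (variance)
    var_y = sum((b - mu_y) * (b - mu_y) for b in y) / n
    cov_xy = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n  # structure
    luminance_term = (2 * mu_x * mu_y + c1) / (mu_x * mu_x + mu_y * mu_y + c1)
    contrast_structure_term = (2 * cov_xy + c2) / (var_x + var_y + c2)
    return luminance_term * contrast_structure_term


if __name__ == "__main__":
    reference = [16, 64, 128, 192, 235, 100, 50, 200]
    brighter = [v + 20 for v in reference]  # uniform brightness shift
    print(round(global_ssim(reference, reference), 4))
    print(round(global_ssim(reference, brighter), 4))
```

Identical images score 1.0. The uniform 20-level brightness shift leaves the contrast and structure term untouched and only slightly lowers the luminance term, so the score stays close to 1 (about 0.99).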

SSIM is an excellent choice for applications like:

- estimation of content-dependent distortion,

- capturing and measuring the impact of noise,

- capturing blurring artifacts.

SSIM is particularly good at reflecting the subjective loss caused by coding. For example, when x264 encodes with AQ (adaptive quantization) turned off, it uses a lower bit rate for smooth areas that contain little detail; AQ allocates the bit rate across macroblocks more effectively. When the results are evaluated, PSNR and VMAF misjudge the actual quality, whereas SSIM correlates better with subjective viewing.

What is probably clear by now is that there is no single “winning” quality metric, and even with SSIM’s positive attributes, there are specific applications where the metric may fail, such as:

- assessing the quality of Super-Resolution algorithms,

- detecting and capturing spatial and rotational shifts,

- capturing changes in brightness, contrast, hue, and saturation.

VMAF (Video Multimethod Assessment Fusion)

Video Multimethod Assessment Fusion (VMAF) is an objective full-reference video quality metric developed by Netflix in collaboration with the University of Southern California, the University of Nantes IPI/LS2N lab, and the Laboratory for Image and Video Engineering (LIVE) at The University of Texas at Austin. What sets VMAF apart is that it predicts subjective video quality from a reference and a distorted video sequence by fusing several elementary quality features with a machine-learning model trained on viewers' subjective scores.

VMAF is becoming a popular choice for evaluating quality when comparing different video codecs, encoder implementations, encoding settings, and transmission standard variants. It is designed for situations in which traditional quality metrics cannot fully reflect all scene characteristics.

Video quality enhancement is an application where quality metrics are essential. PSNR and SSIM are widely used and simple to calculate, but they do not fully reflect how the human eye perceives quality and therefore provide limited value for real-world applications. This is where VMAF shows a tremendous advantage, as illustrated below.

The comparison shows that the image on the right has been enhanced to reveal additional detail: the smaller characters are sharper, and the VMAF score is greatly improved. But does this VMAF improvement mean the result is a better match for the original quality? It turns out that VMAF can be tricked (some use the word “hacked”): the VMAF value can rise even when image quality has not improved.

Take the following picture as an example: compared with the video on the left, the video on the right was processed with contrast enhancement only. The VMAF score of the left video is 67.44, while the VMAF score of the contrast-boosted video on the right is 97. Although the score rose by roughly 30 points, a close subjective look at the detail shows that image quality has not improved: the original blocking (mosaic) artifacts and noise are still present.
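VMAF itself cannot be reproduced in a few lines, but a toy example shows why a fidelity metric such as PSNR can serve as a cross-check against this kind of gain. The sketch applies a simple linear contrast stretch around the frame mean (an illustrative stand-in for a contrast-enhancement filter, not the processing used in the comparison above) and compares PSNR against the reference before and after. The pixel values and the `gain` parameter are made up for illustration.

```python
import math
from typing import List, Sequence


def psnr(reference: Sequence[float], distorted: Sequence[float], peak: int = 255) -> float:
    """PSNR in dB between two equally sized single-plane frames."""
    mse = sum((r - d) ** 2 for r, d in zip(reference, distorted)) / len(reference)
    return math.inf if mse == 0 else 10 * math.log10(peak ** 2 / mse)


def boost_contrast(frame: Sequence[int], gain: float, peak: int = 255) -> List[int]:
    """Stretch pixel values away from the frame mean, then clip to the valid range."""
    mean = sum(frame) / len(frame)
    return [min(peak, max(0, round(mean + gain * (v - mean)))) for v in frame]


if __name__ == "__main__":
    reference = [16, 64, 128, 192, 235, 100, 50, 200]
    decoded = [18, 62, 130, 190, 237, 98, 52, 198]  # small coding error of +/-2
    boosted = boost_contrast(decoded, gain=1.3)
    print(f"decoded vs reference: {psnr(reference, decoded):.2f} dB")
    print(f"boosted vs reference: {psnr(reference, boosted):.2f} dB")
```

In this toy case the decoded frame scores about 42.11 dB and the boosted frame scores far lower, because the stretch moves every pixel further from the reference. This is not a general guarantee, but it illustrates why pairing VMAF with fidelity metrics helps expose score gains that do not come from better reconstruction.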

Although VMAF has flaws, it is a valuable and, in some cases, superior quality metric. For example, in the images above, the left image, which uses deblocking, scores lower than the right image, which has no deblocking and still shows blocking (mosaic) artifacts, because deblocking introduces visible blurring. Because VMAF accounts for both the enhancement and the degradation of image quality relative to the source, it is gaining considerable adoption in video streaming.

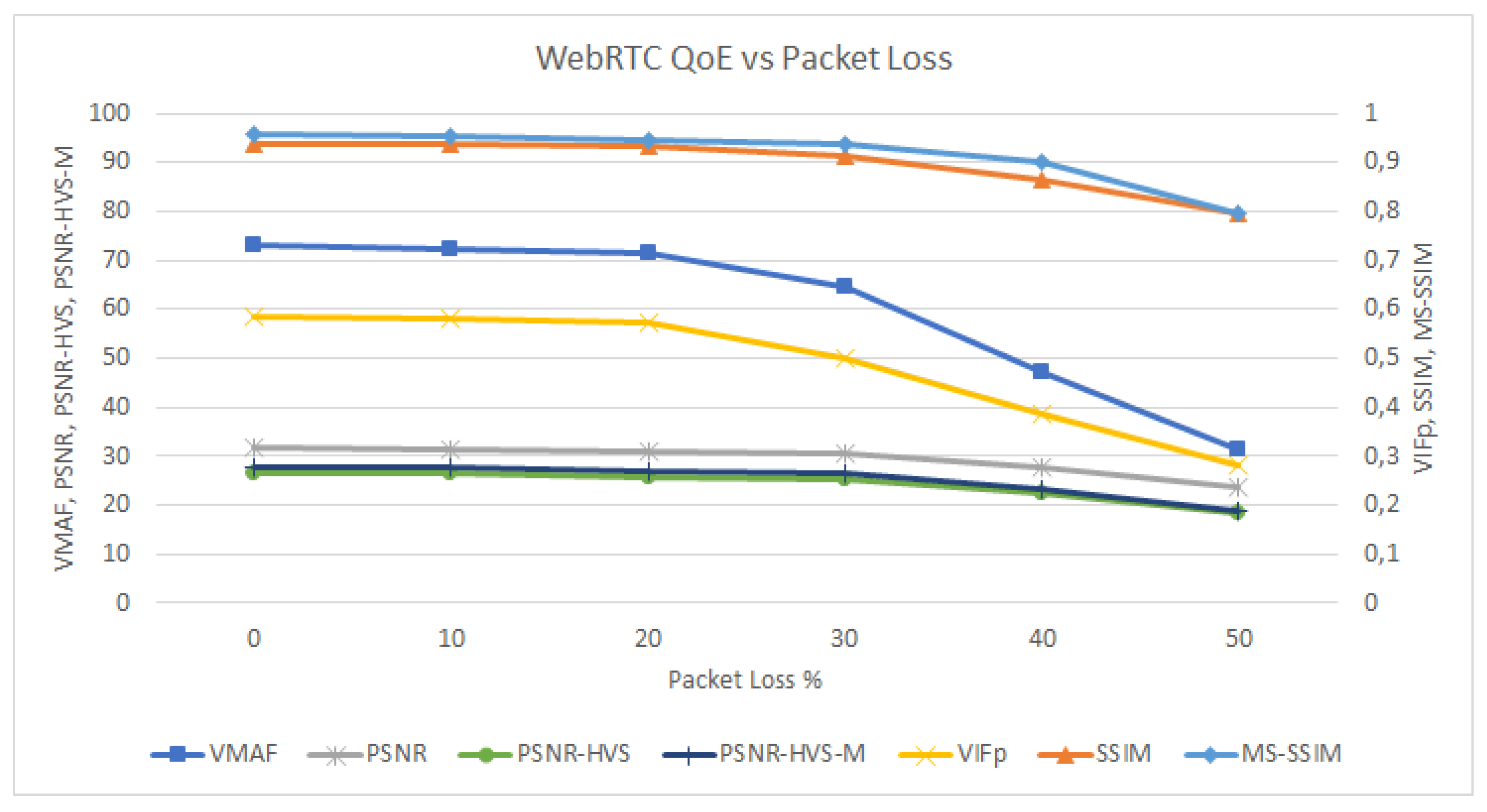

The main point of this article is not to crown a winning quality metric. What matters is selecting the evaluation standard, whether objective or subjective, that best fits the type of measurement desired and the application being tested. Decisions based on a single metric are prone to error. For this reason, we suggest using VMAF together with PSNR and SSIM to obtain the evaluation closest to what the human eye perceives.
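One practical way to follow this advice is to compute all three metrics with FFmpeg, which provides `psnr` and `ssim` filters and, when built with libvmaf support, a `libvmaf` filter. The sketch below only assembles the commands. It assumes an `ffmpeg` binary with libvmaf enabled and that the encoded and source files (hypothetical names here) share resolution and frame rate; each filter takes the distorted video as its first input and the reference as its second.

```python
import shlex
from typing import Dict, List

METRIC_FILTERS = ("psnr", "ssim", "libvmaf")  # FFmpeg filter names


def metric_commands(distorted: str, reference: str) -> Dict[str, List[str]]:
    """Build one FFmpeg command per metric; scores are reported in FFmpeg's log."""
    return {
        name: [
            "ffmpeg", "-i", distorted, "-i", reference,
            # Input 0 (distorted) is the main input; input 1 is the reference.
            "-lavfi", f"[0:v][1:v]{name}",
            "-f", "null", "-",  # decode and measure only; write no output file
        ]
        for name in METRIC_FILTERS
    }


if __name__ == "__main__":
    for name, cmd in metric_commands("encoded.mp4", "source.mp4").items():
        print(name, "->", shlex.join(cmd))
        # To run it: subprocess.run(cmd, check=True)
```

Keeping one command per metric keeps each filtergraph simple; the trade-off is that the inputs are decoded once per metric.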

The goal of Visionular has always been to improve image quality while pushing the bounds on performance without losing the compression advantages of the standard. Our team wakes up every day to do this. It’s a hard job that comes with great rewards as we see some of the world’s largest video services and platforms delight their customers with our video encoding solutions.

[“source=visionular”] Techosta Where Tech Starts From
